Forced vs unforced: which should be used?

After the object detections in multiple filters are merged, the measurement algorithms are run for each filter separately allowing object centroids and shapes to vary by a small amount. These unforced measurements are suited for studies of object properties in a specific filter, e.g., (approximate) total magnitude of an object in a given filter. For colors, however, it is better to use the forced measurements in which common object centroids and shape parameters are used in all the filters.

Which photometry should be used, PSF, Kron, or cModel?

If you are interested in colors of objects, it is good to use photometry that explicitly takes into account the PSF in the measurement such as PSF photometry and CModel photometry because each filter has a different seeing size in the coadds (PSF is not equalized between the filters in the coadds). For point sources, PSF photometry is the method of choice. For extended sources, one can use CModel. If you are not sure if your objects are extended, or if you work on both compact and extended sources, CModel is the best choice. CModel asymptotically approaches PSF photometry for compact sources and can be used for both compact and extended sources.

However, the deblender tends to fail in crowded areas. The deblender failure affects the photometry and you are advised to use the PSF-matched aperture photometry on undeblended (parent) images (undeblended_convolvedflux) in such regions. It gives good colors even for isolated objects, and thus it is a good alternative to CModel. However, it is missing light outside the aperture and should not be used for total magnitudes. Also, it is advised to use a small aperture to minimize effects of object blending.

If you are interested in measurements in a single-band, Kron photometry is a well-tested method to capture a large fraction of object light. PSF (for point sources) and CModel (for both point and extended sources) photometry can also be used, of course. We note that it is notoriously difficult to measure total magnitudes of objects and we are missing a fraction of light even with Kron and CModel.

Which band is used as a reference band in the forced photometry?

The pipeline paper gives the full algorithmic details of the reference filter selection, but the priority is given in the following filter order: i, r, z, y, g, NB0921, NB0816, NB0387. As a result, the i-band is the reference filter for most objects. The following database columns tell you in which filter your object is detected and which filter is used as the reference filter.

merge_peak_{band_name}: if set to true, a source is detected in this band

merge_measurement_{band_name}: if set to true, this band is used as the reference band

How to select objects with clean photometry

You should first select primary objects (i.e., objects in the inner patch and inner tract with no children) by applying isprimary=True. It will be a good practice to then apply pixel flags to make sure that objects do not suffer from problematic pixels; {filter}_pixelflags_saturated_{any, center}, {filter}_pixelflags_interpolated_{any,center}, etc, except for the UD region (see the Known Problems page for details). There are two separate pixel flags depending on which part of objects is concerned. Those with ‘any‘ are set True when any pixel of the object’s footprint are affected. Those with ‘center‘ are set True when any of the central 3×3 pixels are affected. For most cases, the latter should be fine as problematic pixels are interpolated reasonably well in the outer part. Finally, one may want to make sure that the object centroiding is OK with {filter}_sdsscentroid_flags (if the centroiding is bad, photometry is likely bad), but faint objects tend to have these flags on. One should check if scientific results are sensitive to this flag. It is also important to make sure that the photometry is OK using the flags associated with the measurement such as {filter}_psfflux_flags.

How to separate stars from galaxies

An easy way to achieve reasonable star/galaxy separation is to use {filter}_extendedness_value. This parameter is based on a magnitude difference between PSF and CModel photometry. For compact sources, CModel approaches PSF photometry asymptotically and the magnitude difference becomes small. The extendedness parameter is set to 0 when the difference is sufficiently small, and 1 otherwise. This page illustrates the star/galaxy classification accuracy of this parameter under a range of seeing conditions.

How to measure the size of an object

It depends strongly on how you define the size of an object, but we have computed Gaussan-weighted 2nd-order moment for each object and that will be useful in many cases. The moment is stored as {filter}_sdssshape_shape{11,22,12} in the database. The moment for the PSF model at the object’s position can be found as {filter}_sdssshape_psf_shape{11,22,12}. You can first compute the determinant radius as r_det= (shape11 * shape22 – shape12^2) ^ 0.25. Under the assumption of Gaussian, one can convert the radius to FWHM by applying 2 * sqrt( 2 * ln 2).

How to get a PSF model for my object?

The PSF information is stored in the binary tables attached to the image files such as calexp. You can generate a PSF image at an arbitrary position with hscPipe, but we recommend to use PSF picker. You can upload a coordinate list and you will get model PSFs at the given positions in the fits format.

What is a sky object?

We perform various measurements on objects such as flux measurements and they are what you use for science. In addition to real objects, we perform the same measurements on the blank sky, where there is no object. By default, the pipeline picks 100 random points in a patch outside of object footprints and make the blank-sky measurements. They are called sky objects and are very useful for measuring, e.g., the background fluctuation and background residual. The sky objects are stored in the database just like real objects and they have merge_peak_sky = True. In Deep+UltraDeep fields, a patch may not have full 100 sky objects because there are so many objects that the pipeline cannot find space to put the sky objects. Less than 100 sky objects are included in such patches. The sky objects come with various measurement flags, but some of them are not meaningful and should not be applied. E.g., the centroid flag does not mean anything for sky objects and should be ignored.

Stitching images together

We split our survey fields into tracts, each of which is about 3 square degrees. A tract is further divided into 9×9 patches and the measurements are performed on each patch separately in order to parallelize the processing. The coadd images are available only for each patch, which is 4K x 4K in size.

If you would like to work on a larger image (e.g., tract image), you can use image stitcher #1 to combine patch images together. You do not need to install the pipeline to run this code, but you do need a local copy of the patch images. Image stitcher #1 cannot stitch patches in different tracts together.

Image stitcher #2 can stitch patches from different tracts. This can also be used to stitch CCD images (CORR images) together to make a full field-of view image. The HSC (or LSST) pipeline has to be installed to use this tool. The overlapping regions between the adjacent tracts are not exactly the same; tract A may have slightly different DNs (counts) and astrometry from tract B in the overlapping region. In the resultant image, this tool simply adopts the pixels of the later tract B in the arguments.

How to install the processing pipeline

Pre-built binary packages for CentOS 6 and 7 are available on this page. They likely work on other redhat-based distributions, but we did not test (and we do not support). There is also a build script, which one may try if the binary packages do not work.

Is there any easy way to know the depth and seeing around my objects?

Yes! The easiest way will be to look at the QA plots and you can find the seeing and depth of the filed you are interested in. You can also use the patch_qa table, which gives the average 5 sigma limiting magnitudes and PSF FWHM in arcsec for each patch and for each filter. If you would like know the PSF sizes at the positions of your objects, refer to “How to measure the size of an object” above.

I need the system response functions of HSC

You can go to the survey page and look for the figure summarizing various transmission/response functions. For your convenience, the total system responses are here.

How can I retrieve an effective response curve for my object?

A coadd image now carries information about effective filter response for each object. The following sample script shows you how to retrieve an effective response curve for a given object. You will need to have the pipeline installed.

from lsst.daf.persistence import Butler

import numpy as np

butler = Butler("/path/to/rerun")

# replace data IDs below with the desired tract/patch/filter, of course

coadd = butler.get("deepCoadd_calexp", tract=9615, patch="4,4", filter="HSC-I")

catalog = butler.get("deepCoadd_meas", tract=9615, patch="4,4", filter="HSC-I")

obj = catalog[4000] # some random object

wavelengths = np.arange(6500, 9000, 10, dtype=float) # wavelengths to sample at in Angstroms

transmission = coadd.getInfo().getTransmissionCurve().sampleAt(obj.getCentroid(), wavelengths)

I cannot view/read fits image files. Are they corrupted?

We hope not! Lossless compression has been applied to images since PDR2. Because of this, you need a recent version of image viewer such as ds9 to display an image. For the same reason, you need a recent version of I/O libraries to read images.

Where can I find the shape catalog from PDR1?

The shape catalog has been loaded to the database under the PDR1 schema (not under PDR2!) because of the similarity of the catalog structure to PDR1. Look for s16a_wide.weaklensing_hsm_regauss. The shape catalog is based on the S16A internal data release, which is processed with the same version of hscPipe as PDR1. Be sure to explicitly specify the schema in the online/offline SQL tools when you query the database. Details of the catalog can be found in this page. If you would like to retrieve flat files from S16A, be sure to follow the right links from the Data Access page. hscMap also can display images from S16A by selecting S16A and unselecting S18A from the dataset menu.

Note that shapes from PDR2 are withheld and we do not plan to release them because they turn out to be problematic (see Section 2.3 of the PDR3 paper). Instead, we plan to release a shape catalog from an internal S19A release once fully validate the shape measurements. The shapes from PDR3 are also withheld and we do not yet have a release schedule for them.

For advanced users: butler access to catalog and image products

butler is a useful interface to load various types of image and catalog data. To load the data, you will only need to specify the data directory and a pair of visit+ccd for the CCD data and tract+patch for coadds. The following script will give you a sense of how to use butler. It reads an image and display it in ds9. Note that you need to have the pipeline installed in order to use butler.

In this example, target is set to ‘calexp’ to load CORR-.fits. target is defined as follows:

| For the following files, specify visit + ccd | ||

| Target | Data Form | Data Type |

| bias | Bias data | ExposureF |

| dark | Dark data | ExposureF |

| flat | Flat data | ExposureF |

| fringe | Fringe data | ExposureF |

| postISRCCD | post processing data (not created in default setting) | ExposureF |

| calexp | sky subtracted data | ExposureF |

| psf | PSF used in analysis | psf |

| src | object catalog made from detrended data | SourceCatalog |

| wcs, frc | object catalog used in mosaic.py | ExposureI |

| Specify tract + patch for the followings | |

| Target | Data Form |

| deepCoadd_calexp | coadd data |

| deepCoadd_psf | PSF of coadd image |

| deepCoadd_src | catalog made from coadd data |

Credit and permission of using materials on HSC web

Materials on this website, including images from hscMap, can be used without prior permission within the following scopes.

- Extent of free use stipulated by Japanese copyright law (private use, educational use, news reporting, etc.)

- Usage in academic research, education, and learning activities

- Usage by news organizations

- Usage in printed media

- Usage in websites and social networks

In all the cases, please explicitly include the credit, “NAOJ / HSC Collaboration“.

See also the following web page about “Guide to Using NAOJ Website Copyrighted Materials” for details.

https://www.nao.ac.jp/en/terms/copyright.html (in English)

https://www.nao.ac.jp/terms/copyright.html (in Japanese)

How to get area (size) of each survey field

The survey area can be calculated by meta information stored in database tables. There are a few ways to estimate the area, but one simple way would be to count the number of HEALPix indices covered by objects:

WITH mosaic_hpx11_list AS (

SELECT distinct hpx11_id

FROM pdr3_dud.mosaic_hpx11

WHERE skymap_id IN (

SELECT distinct skymap_id

FROM pdr3_dud.forced

-- WHERE your constraints here...

)

)

SELECT COUNT(hpx11_id) * (40000. / (12 * 4^11)) AS area_deg2

FROM mosaic_hpx11_list;

- 40000 : square degrees of the whole sky

- 12 × 411 : the number of HEALPix pixels in the whole sky

You can also use the random points for the area estimate. See the random points page.

Can I use object IDs to match objects from different survey layers?

NO! Due to the way we generate object IDs, the same objects have different object IDs in different survey layers (Wide and Deep+UltraDeep). If you want to make a merged catalog of objects from Wide and Deep+UltraDeep, you will need to do object matching by position.

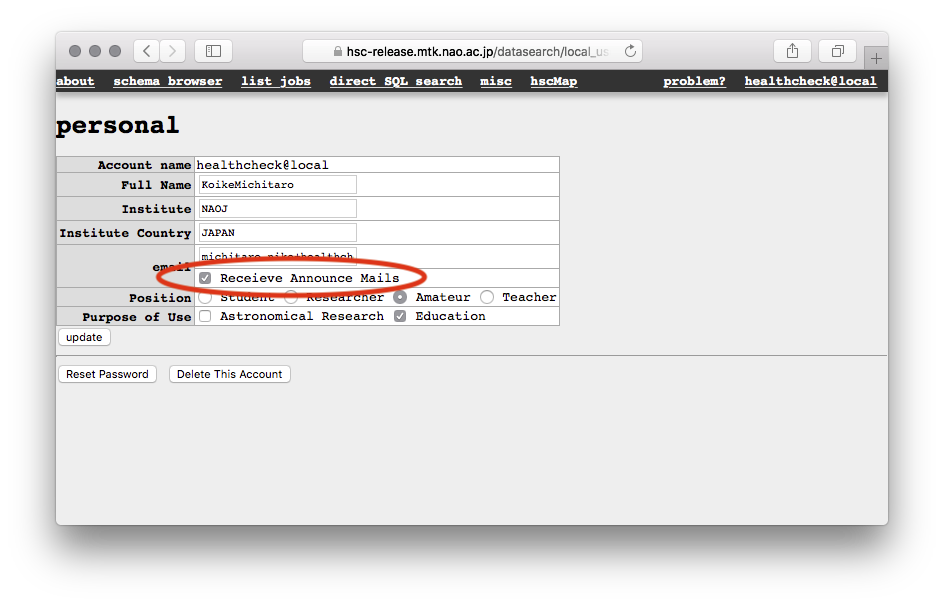

I do not want to receive email notifications

You can visit the CAS search page first at https://hsc-release.mtk.nao.ac.jp/datasearch/, then go to your personal setting page located at the top-right on the menu tab. You will see a form like the following image. Please remove the check on the “Receive Announce Mails”.

Where can I get the raw data?

NEW: I understand there are two types of coadds: local vs. global sky subtraction. Where can I find them?

A patch image named x,y.fits under the deepCoadd/ directory is the global sky subtraction applied. If you are interested in extended wings of objects, this is the image you want to look at. There is no corresponding catalog products from this image. calexp...fits under deepCoadd-results/ are local sky subtraction applied and are used for detection and measurements. In other words, all the objects in the forced and meas tables at the database are from this image.

NEW: What is the photometric zero-point of coadd?

It is 27.0 mag / DN. However, this is not accurate at a few percent level because aperture corrections, which accounts for missing light from the aperture used for photometric calibration, cannot be applied at the image level. A few percent uncertainty may or may not matter for your science, but this should be kept in mind.

This page does not answer my question. What should I do?

We are sorry about that. Please send your question to hsc_pdr@hsc-software.mtk.nao.ac.jp and we will work on it.